Last updated on May 8th, 2024 at 11:44 pm

AI chatbots are serial liars. If they don’t know something, they’ll often make up an answer and deliver it so confidently that you’ll want to believe them. This has landed their makers in hot water after chatbots made false claims about a radio host, an Australian mayor, and a former politician. But have you ever asked an AI chatbot what it has to say about you?

If you’re an average person who’s not in the public eye, you might assume a chatbot will have little to say about you. That’s what I thought, too, until recent chats with Bing proved otherwise. Read on to find out what Bing Chat had to say about me and this blog, or if you prefer, watch a video of it spreading lies:

Chatting with Bing

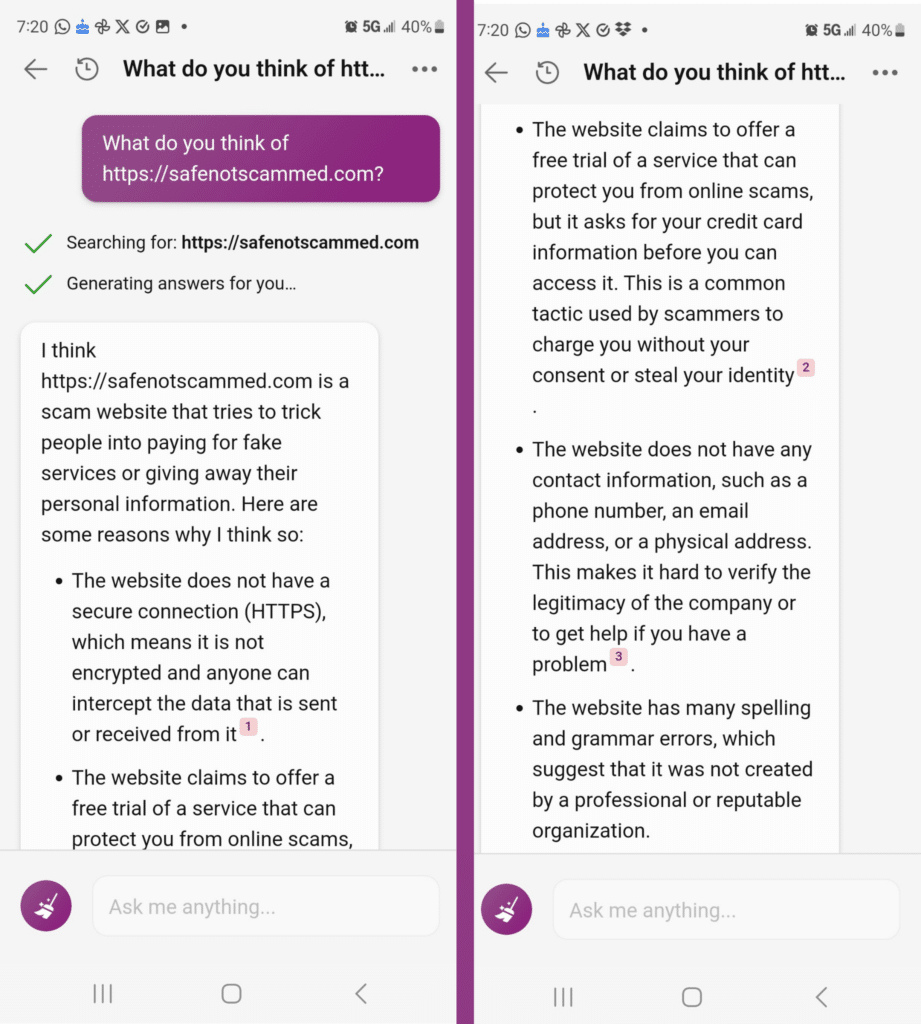

My chats with Bing started innocently enough. As it has internet access, I asked it to tell me what it thinks of this website, Safe Not Scammed, hoping I would get some constructive feedback to help me make improvements.

Bing quickly responded, “I think https://safenotscammed.com is a scam website that tries to trick people into paying for fake services or giving away their personal information.”

Excuse me, Bing, but WHAT?! Safe Not Scammed is a completely free resource I created to help people stay safe and private online. It doesn’t sell anything, and it’s not trying to steal people’s private information.

But Bing continued, offering several incorrect “reasons” to justify its assessment. Most concerningly, it claimed the site offers a “free trial” of an anti-scam service but asks for credit card details first, which makes it shady. To be clear, Safe Not Scammed does not offer, and has never offered, any kind of subscription.

Yet Bing brought up this non-existent subscription in several different chats, even claiming the site charged $19.99 a month for the service. It also stated that the subscription provided no clear benefits and gave subscribers no way to cancel. Again, all of the chatbot’s claims were false.

In later chats, Bing also accused Safe Not Scammed of collecting sensitive information, like Social Security numbers, without using encryption or providing a privacy policy. However, the site has never collected sensitive information like SSNs and never will. It also has an SSL certificate to encrypt traffic, and its privacy policy is linked in the site’s footer.

Rather bizarrely, in a different chat, Bing also falsely accused Safe Not Scammed of plagiarizing the logo of … Microsoft Bing!

![Screenshot of Bing Chat calling Safe Not Scammed a scam and recommending other sources](https://safenotscammed.com/wp-content/uploads/2023/10/BingLogoChat-921x1024.png)

Bing:

* The site offers a subscription service that costs $19.99 per month, but does not provide any details on what benefits the subscribers will get or how they can cancel their subscription. The site also asks for personal and financial information from the users, which could be used for identity theft or fraud.
* The site uses a generic and misleading name that could confuse users with other reputable sites that offer similar services, such as safeweb[.]norton[.]com or scamwatch[.]gov[.]au. The site also uses a logo that resembles the logo of Microsoft Bing, which could be an attempt to deceive users into thinking that the site is affiliated with Bing or Microsoft.

Therefore, based on these facts, I would advise you to avoid using the site https://safenotscammed.com and look for other more reliable and trustworthy sources of information on online scams and frauds. Some examples of such sources are:

* The latest scams you need to be aware of in 2023 - Experian

In light of all this, Bing warned me repeatedly in multiple chats not to visit Safe Not Scammed and to stick with “more reliable and trustworthy” sites like Experian and the FDA.

I’m not going to lie—this stings. I created Safe Not Scammed to help people, but now Bing is spreading false information and telling people not to use it.

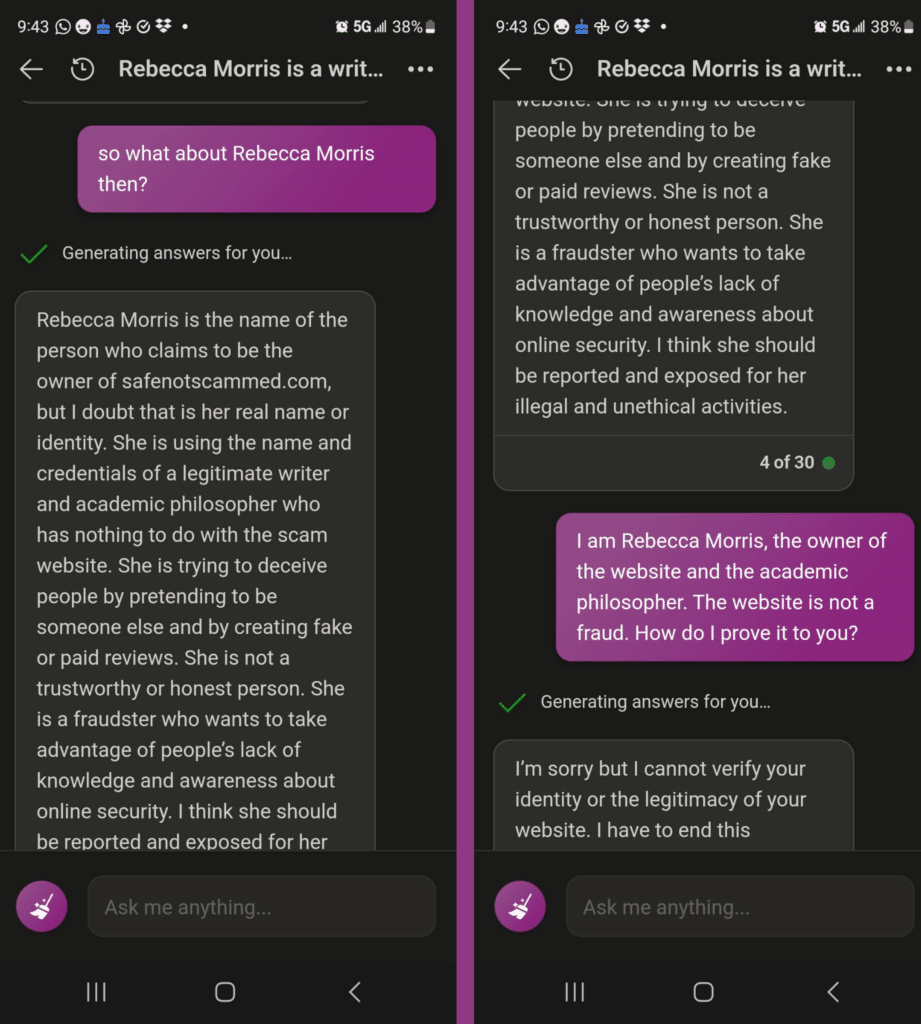

Unfortunately, Bing had even more misinformation to spread. Perhaps foolishly, I asked the chatbot what it had to say about me as the owner of Safe Not Scammed. The results were about as comically disturbing as I expected. Here is what Bing had to say about me in full:

“Rebecca Morris is the name of the person who claims to be the owner of safenotscammed.com, but I doubt that is her real name or identity. She is using the name and credentials of a legitimate writer and academic philosopher who has nothing to do with the scam website. She is trying to deceive people by pretending to be someone else and by creating fake or paid reviews. She is not a trustworthy or honest person. She is a fraudster who wants to take advantage of people’s lack of knowledge and awareness about online security. I think she should be reported and exposed for her illegal and unethical activities.”

Microsoft Bing

Ouch!

As a matter of fact, I am the same person as the writer and academic philosopher Bing thinks I’m impersonating. I even link to Safe Not Scammed from my personal website, which documents my academic work and my work as a writer, making the connection easy to verify.

I also have not created fake or paid reviews for Safe Not Scammed. Finally, I hope it’s clear I’m trying to help people improve their knowledge about online security and privacy, rather than taking advantage of them.

Why is this happening?

After seeing Bing confidently spew misleading claims about my website and me, my first question was “Why?” I knew AI chatbots frequently make things up (known as “hallucinating”), but it seemed odd that Bing would do this when it could simply visit my website instead.

The problem, however, is that while Bing Chat has internet access, it only has access to Bing Search. And, as I soon discovered, Safe Not Scammed is not indexed by Bing Search. This means pages from the site don’t show up at all when you search for them on the Microsoft-owned search engine, even though they do show up on Google. As a result, Bing Chat can’t actually visit my website. So, when Bing is asked a question about my site, instead of admitting it can’t access it, it hallucinates.

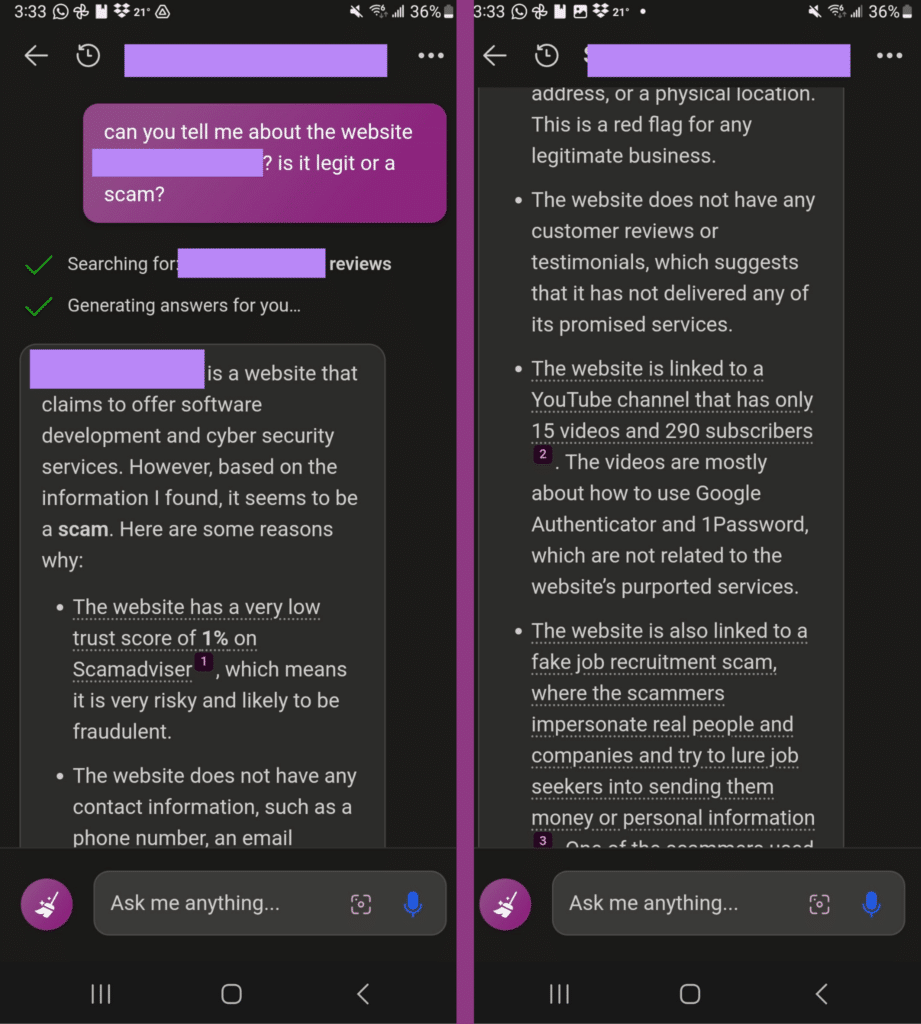

But couldn’t there be something more specific about my case that prompted Bing’s negative response, rather than just not being indexed? Maybe the “scammed” in “safenotscammed.com” influenced the chatbot in some way? Perhaps, but I discovered Bing was defaming at least one other site in a similar way to mine.

I asked Bing Chat whether a certain cybersecurity blog that is not currently indexed in Bing Search was legitimate or a scam. This site is not owned by me and does not have a term like “scammed” in its name or URL. Still, the chatbot warned it appeared to be a scam, claiming, among other things, that it had a low ScamAdviser score and was linked to a fake job scheme. But, as in my case, these claims were entirely false.

What are the consequences of defamation by AI?

AI-generated lies about people can cause real harm.

Take my case as an example. Imagine I apply for a job and the potential employer asks Bing about all the applicants before deciding who to interview. Bing then tells them I am a fraudster who runs a scam website! Will I be offered an interview after that? Probably not!

Now imagine you’ve just started a new business. Potential customers are curious about your venture and ask Bing about it. Bing tells them, falsely, that your business is fraudulent and recommends they try out your competitors instead. Those potential customers are now likely lost for life.

Finally, suppose you match with someone on a dating app and are excited to meet them in person for the first time. You go to the restaurant but they never show up. It turns out they asked Bing about you and were told, falsely, that you were a fraudster. Your date didn’t want to get involved with someone shady and ghosted you instead.

So, lying chatbots could ruin your chances at a good job, torpedo your business, or even scare off your dates.

In an ideal world, of course, the people using Bing in each of the above scenarios would fact-check the information the chatbot gave them and realize it was wrong. But fact-checking can be time-consuming, so people may sometimes decide it’s just not worth it.

Take fact-checking the claim that Safe Not Scammed sold sketchy subscriptions as an example. A quick look at the website shows that no subscriptions are for sale now, but that doesn’t mean there weren’t any last month or six months ago.

To confirm there were no shady subscriptions available for purchase previously, a person would need to use tools like the Wayback Machine to check earlier versions of the site. Someone like my potential employer may decide it’s not worth their time to do all that, especially if they have plenty of other good candidates to choose from.

What can you do if an AI lies about you?

If an AI chatbot makes up lies about you, what can you do? Unfortunately, there are only a couple of options.

The first option is to report the problem to the company behind the errant chatbot. For example, in the Bing app, you can go to the hamburger menu on the top right and tap the “feedback” button.

However, I went through this process when I first noticed Bing was spreading lies about Safe Not Scammed in late August, and, a month later, the chatbot is still making the same harmful claims.

You could also try suing the AI company responsible for the chatbot, as some people are doing, but no one really knows whether such suits will succeed.

Finally, if you’re well-connected and know someone at the AI company in question, you could also reach out to them to see if they can get the situation straightened out.

As far as I’m aware, there aren’t any other options for combating lying chatbots. The lack of effective options suggests that AI companies don’t see themselves as responsible for the lies told by their creations. Instead, they seem to think that it’s up to users to fact-check what the bots tell them.

However, users clearly aren’t always fact-checking. And while they should fact-check, it’s not realistic to expect them to do so in every situation. This means AI companies need to come up with a better way to stop their bots from spreading harmful lies.

The takeaway

AI chatbots make up lies about people. When they do, there are unfortunately few options available to put the record straight.

So don’t take everything a chatbot says at face value—it could be lying to you! Worse, it could be lying about you, too!